
In the box: the ultimate beginner’s guide to digital audio

It’s entirely possible to make music on a computer without having the faintest idea of how digital audio works, but a little knowledge can go a long way when it comes to pushing the medium to its limits.

For those unfamiliar with the basics of how sound works, let’s start at square one. Some of the following explanations may seem a little abstract out of the context of actual mixing, but it’s important to introduce these ideas now, as we’ll refer back to them when we get more hands-on later in the issue.

Sound is the oscillation (that is, waves) of pressure through a medium – air, for example. When these waves hit our tympanic membranes (eardrums), the air vibration is converted to a vibration in the fluid that fills the channels of the inner ear. These channels also host cells with microscopic ‘hairs’ (called stereocilia) that release chemical neurotransmitters when pushed hard enough by the vibrations in the fluid. It’s these neurotransmitters that tell our brain what we’re hearing.

Recording audio works by converting the pressure waves in the air into an electrical signal. For example, when we (used to) record using a microphone feeding into a tape recorder, the transducer in the mic converted the pressure oscillation in the air into an electrical signal. That signal was fed to the tape head, which polarised the magnetic particles on the tape running past it in direct proportion to the signal.

The movement of sound through air – and, indeed, the signal recorded to tape – is what we’d call an analogue signal. That means it’s continuous: it moves smoothly from one ‘value’ to the next without ‘stepping’, even under the most microscopic scrutiny.

By the numbers

Computers, for the most part, are digital, which means they read and write information as discrete values – that is, a string of numbers. So, how does a computer turn a smooth waveform of infinite resolution into a series of numbers that it can understand?

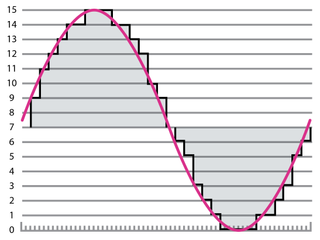

The answer is a method called pulse code modulation (PCM), the main system used for storing digital audio. It begins with analogue-to-digital conversion (ADC), which involves measuring the value of the continuous signal at regular intervals to create a facsimile of the original waveform. The more often the signal is measured – or ‘sampled’ – and the more precisely each value is recorded, the closer to the original waveform the digital recording will be (see Fig. 1).

The number of samples taken per second is called the sampling rate. CD-quality audio uses a sampling rate of 44.1kHz, meaning that the audio signal is sampled a whopping 44,100 times per second. This gives a fairly smooth representation of even high frequencies, but the higher the frequency we want to capture accurately, the higher the sampling rate required.
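As a rough illustration, the Python sketch below ‘samples’ a pure sine tone at regular intervals, the way an ADC measures a continuous signal. The function name `sample_sine` is our own invention, and a real converter measures an electrical voltage rather than evaluating a formula, so treat this as a model of the idea rather than of any particular hardware.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, duration_s, amplitude=1.0):
    """Measure a continuous sine wave at regular intervals (PCM-style sampling)."""
    num_samples = round(sample_rate_hz * duration_s)
    # Sample n is taken at time n / sample_rate_hz seconds
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(num_samples)]

samples = sample_sine(1000, 44100, 0.01)   # 10ms of a 1kHz tone
print(len(samples))                        # 441
print(44100 / 1000)                        # 44.1 samples per cycle of a 1kHz tone
print(44100 / 20000)                       # 2.205 samples per cycle of a 20kHz tone
```

The last two lines show why high frequencies are the demanding ones: at 44.1kHz a 20kHz tone gets only a couple of samples per cycle.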

That figure – 44.1kHz – is pretty specific, and there’s a reason for it. The highest frequency that PCM audio can represent is just under half the sample rate, and the human ear can hear frequencies from around 20Hz to 20kHz; at the top end, that’s 20,000 oscillations (or cycles) per second. This is where we encounter the Nyquist–Shannon sampling theorem, which deals with how often a signal must be sampled for it to be reconstructed accurately.

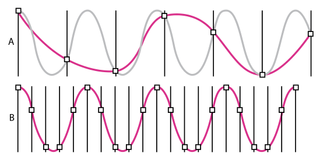

The theorem states that the sampling rate must be more than double the highest frequency being recorded; otherwise, what’s known as aliasing occurs (see Fig. 2). Aliasing causes unmusical artefacts in the sound, because any frequency above the Nyquist frequency (half the sample rate) is ‘reflected’ back down below it. So at 44.1kHz, Nyquist is 22,050Hz, and if we try to sample, say, 25,050Hz (22,050Hz plus 3000Hz), we’ll get 19,050Hz (22,050Hz minus 3000Hz) instead. As frequencies above 20kHz or so can’t be perceived by the human ear, they’re not massively useful anyway.
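The folding arithmetic can be checked with a short Python sketch. `alias_frequency` is a hypothetical helper written for this example; it assumes a single pure tone and no anti-aliasing filter. Cosines are used in the comparison because a folded sine comes back with its polarity inverted, which would obscure the point.

```python
import math

def alias_frequency(freq_hz, sample_rate_hz):
    """Return the frequency a pure tone appears at after sampling (folding around Nyquist)."""
    nyquist = sample_rate_hz / 2
    f = freq_hz % sample_rate_hz   # sampling can't tell f apart from f + k * sample_rate
    return sample_rate_hz - f if f > nyquist else f

print(alias_frequency(25050, 44100))   # 19050
print(alias_frequency(1000, 44100))    # 1000: below Nyquist, so unchanged

# The sampled values of a 25,050Hz cosine match those of a 19,050Hz cosine
fs = 44100
a = [math.cos(2 * math.pi * 25050 * n / fs) for n in range(100)]
b = [math.cos(2 * math.pi * 19050 * n / fs) for n in range(100)]
print(max(abs(x - y) for x, y in zip(a, b)) < 1e-9)   # True
```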

In ADC, what’s known as an anti-aliasing filter is used to band-limit the sound, preventing any frequency higher than half the sampling rate from getting through. So, the 44.1kHz rate gives us double our 20kHz, plus a little extra room for this low-pass filter to remove the unwanted high-end content. 44.1kHz was the de facto digital playback standard throughout the CD era, although much higher sample rates are now common.

Once a sample has been taken, it needs to be stored as a number. You probably already know that CD-quality audio is 16-bit – but what, exactly, is a bit? A bit (short for binary digit) is the most fundamental unit of information in computing, and can have one of two values, usually represented as 1 or 0. Each bit we add doubles the number of available states: two bits give four states (two to the power of two) – that is, 00, 01, 10 and 11.

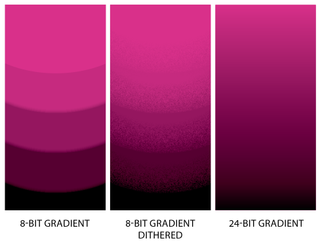

Four bits allow for 16 states (two to the power of four), eight bits allow for 256 states (two to the power of eight), and 16 bits a massive 65,536 possible states (two to the power of 16). So, we’ve gone from a primitive ‘on or off’ state at 1-bit to a pretty fine resolution at 16-bit. See Fig. 3 for a visual representation of this.
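The doubling described above is simply two raised to the number of bits. This small Python sketch (the `num_states` name is ours) prints the counts quoted in the text and lists every 2-bit pattern.

```python
from itertools import product

def num_states(bits):
    """Number of distinct values a word of `bits` binary digits can hold."""
    return 2 ** bits

for bits in (1, 2, 4, 8, 16):
    print(bits, num_states(bits))   # 1 2, 2 4, 4 16, 8 256, 16 65536

# Every possible 2-bit pattern
print(["".join(p) for p in product("01", repeat=2)])   # ['00', '01', '10', '11']
```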

A bit random

When an analogue-to-digital converter samples an analogue signal and rounds each value up or down to the nearest available level, as dictated by the number of available bits, we get ‘quantisation error’ or ‘quantisation distortion’. This is the difference between the original analogue signal and its digital facsimile, and can be considered an additional stochastic signal known as ‘quantisation noise’. A stochastic signal is non-deterministic, behaving differently each time it’s observed – a random signal, in other words.
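To make the rounding concrete, here is a minimal Python sketch that snaps a value to the nearest level available at a given bit depth and reports the leftover error. It assumes the signed-integer convention common in WAV files, where full scale maps to 2^(bits−1); converters and software differ in the exact scaling, and `quantise` is a hypothetical helper, not a library function.

```python
def quantise(x, bits):
    """Round a sample in the range -1.0..+1.0 to the nearest level a signed
    `bits`-bit integer can store, then scale it back to -1.0..+1.0."""
    scale = 2 ** (bits - 1)                      # e.g. 32,768 for 16-bit
    level = round(x * scale)
    level = max(-scale, min(scale - 1, level))   # stay inside the integer range
    return level / scale

original = 0.3
for bits in (2, 4, 8, 16):
    q = quantise(original, bits)
    # Columns: bit depth, stored value, quantisation error
    print(bits, q, original - q)
```

The error never exceeds half a step, and the step shrinks by half with every extra bit.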

So, in a digital recording, our ‘noise floor’ (the smallest measurement we can take with any certainty) is determined by the number of bits we’re using, known as the ‘word size’ or ‘word length’. Every bit we add to the word length doubles the number of possible states for each sample, which adds roughly another 6dB of dynamic range between the loudest possible level and the noise floor.

For example, a 16-bit digital recording at CD quality gives us a theoretical 96dB of dynamic range. It might surprise you to learn that this is actually higher than the dynamic range of vinyl, which is more like 80dB. If you’re unfamiliar with decibels and dynamic range, don’t worry – we’ll come back to them shortly.
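The 6dB-per-bit rule and the 96dB figure both come from 20 × log10 of the number of available levels. A minimal Python sketch (the `dynamic_range_db` name is ours):

```python
import math

def dynamic_range_db(bits):
    """Theoretical dynamic range of linear PCM: 20 * log10(number of levels)."""
    return 20 * math.log10(2 ** bits)

print(round(20 * math.log10(2), 2))   # 6.02: dB gained per extra bit
for bits in (8, 16, 24):
    print(bits, round(dynamic_range_db(bits), 1))   # 8 48.2, 16 96.3, 24 144.5
```

Textbooks often quote 6.02 × bits + 1.76dB for a full-scale sine wave instead; the extra 1.76dB comes from comparing the sine’s RMS level with the RMS level of the quantisation noise.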

Comparing the same sample at different bit depths shows that changing the bit depth doesn’t affect how loud a sample can go; it only affects the amplitude resolution of the waveform. A common misconception is that increasing bit depth only improves resolution at lower volume levels, but in fact the extra resolution improves the accuracy of the signal across the entire amplitude range.

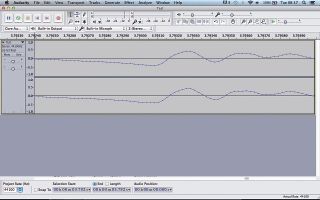

If you’re new to music production and this all seems very abstract, a practical example might bring things into focus. Install the free audio editor Audacity, launch it and set the input to your computer’s built-in microphone. Click the record button and repeatedly say the word “test” into the microphone, quietly at first, then louder each time. You should see something like the image below: a series of similar waveforms that increase in size on the vertical axis.

Testing, testing

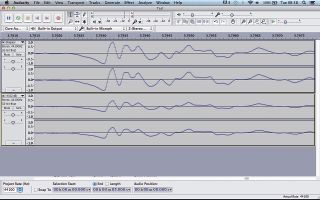

Let’s take a look at our recording up close. Click the first “test”, then hold the Cmd key on Mac or Ctrl on PC and press 1 to zoom in on the waveform. The waveform will look continuous at first, but once you’ve zoomed in close enough, you’ll see discrete points representing the levels of individual samples – see Fig. 5.

Hold Cmd/Ctrl again and press 3 to zoom out and see the entire waveform. The increase in vertical size represents the words increasing in volume, or amplitude. Now click the loudest “test” and zoom in on it. At the loudest points, the waveform will approach or even reach the +1.0/-1.0 marks on the vertical axis. No matter what bit depth you use, +/-1 is the loudest level your recorded signal can reach.

However, the greater the bit depth, the more accurate the waveform, and this is particularly important when dealing with signals that contain both loud and quiet elements – percussive sounds, for example. This is because more bits give us more dynamic range.

Dynamic range is the ratio of the largest and smallest possible values of a variable such as amplitude, and it can be expressed in decibels (dB), which we’ll look at in more detail later. In simple terms, it’s the difference in dB between the loudest and softest parts of the signal.


Here’s an example of dynamic range in action that most of us will be familiar with: you’re watching a film late at night, trying to keep the volume at a level that isn’t going to disturb anyone. The trouble is, the scenes in which the characters talk to each other are too quiet, while the scenes with guns and explosions are too loud! Wouldn’t it be better if the film’s soundtrack stayed at a consistent volume level?

Well, for late-night home viewing, maybe; but in a cinema, it’s the difference in amplitude between the characters’ dialogue and the explosions that gives the latter their impact. As such, a wide dynamic range is important in film soundtracks, as well as in classical music, where you’ll hear much quieter sections than you ever would in a dance track. For example, listen to the fourth movement of Dvořák’s New World Symphony, which features very delicate, restrained sections and intense crescendos. Dynamic range matters in club music too, though, because it helps keep percussive sounds punchy.

Range finder

The importance of dynamic range becomes very apparent when compressing drum sounds. For the uninitiated, compression is a process that reduces the dynamic range of a signal to achieve a louder average level. Once the signal exceeds a set level (the threshold), the amount by which it exceeds that threshold is reduced by a ratio set by the user.

To compensate for the overall drop in volume that this introduces, the compressor’s output is then boosted so that it peaks at the same level as the uncompressed signal. Because the average level is now higher, thanks to the reduced dynamic range, it sounds louder – see Fig. 6.
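The threshold, ratio and make-up gain arithmetic can be sketched in a few lines of Python. This models only a simple hard-knee static gain curve in dB; it deliberately ignores attack, release, knee shape and the level detection a real compressor performs over time, and the -20dB threshold and 4:1 ratio are arbitrary example settings.

```python
def compress_db(level_db, threshold_db, ratio):
    """Static gain curve of a simple hard-knee compressor, in dB.
    Below the threshold the level is untouched; above it, the overshoot is divided by `ratio`."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

threshold, ratio = -20.0, 4.0
peak_in = 0.0
# Make-up gain: boost the output so the loudest peak lands back where it started
makeup = peak_in - compress_db(peak_in, threshold, ratio)   # +15dB here

for level in (0.0, -8.0, -20.0, -30.0):
    # Columns: input level, output level after compression and make-up gain
    print(level, compress_db(level, threshold, ratio) + makeup)
    # 0.0 0.0 / -8.0 -2.0 / -20.0 -5.0 / -30.0 -15.0
```

The peak stays at 0dB, but the quietest level rises from -30dB to -15dB, so a 30dB spread has been squeezed into 15dB and the average level goes up.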

However, too much compression can suck the life out of a sound, making it feel flabby and weak. Striking the right balance between keeping sounds punchy and maintaining a high average volume is one of the most important aspects of mixing for club play.

Generally speaking – and up to a point – the louder music gets, the better it sounds. Unfortunately, as we’ve seen, our waveform can only get so loud: once we’ve reached the top of the 65,536 available steps, there’s nowhere else for the audio information to go! So, what happens if we’re recording a signal that’s too ‘hot’ (that is, too loud) for our system?

In this situation we get what’s known as digital clipping. When a signal exceeds the maximum capacity of the system, the top of the waveform is simply cut off, or ‘clipped’. This loss of information misrepresents the amplitude of the clipped waveform and introduces spurious higher frequencies. Although clipping can have its uses – which we’ll look at later in the issue – it’s generally undesirable, which is why we can’t just keep boosting the level of our mixes to make them as loud as we want.
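The claim that clipping creates new frequencies can be checked numerically. In this Python sketch (the function names are our own), a sine wave that asks for twice full scale is hard-clipped at ±1.0, and a single DFT bin measures the third harmonic, which a pure sine doesn’t have. Using one cycle per 64-sample buffer is an arbitrary simplification so that harmonic k lands exactly in bin k.

```python
import cmath
import math

def hard_clip(samples, limit=1.0):
    """Flatten anything beyond +/-limit, as a fixed-point system does when it runs out of steps."""
    return [max(-limit, min(limit, s)) for s in samples]

def harmonic_level(samples, k):
    """Amplitude of the k-th harmonic (one cycle per buffer), via a single DFT bin."""
    n = len(samples)
    acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2 * abs(acc) / n

N = 64
clean = [math.sin(2 * math.pi * i / N) for i in range(N)]   # peaks at +/-1.0
too_hot = [2 * s for s in clean]                             # asks for +/-2.0
clipped = hard_clip(too_hot)

print(harmonic_level(clean, 3))     # effectively zero: a pure sine has no 3rd harmonic
print(harmonic_level(clipped, 3))   # clearly non-zero: clipping added new frequencies
```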

As a music connoisseur, you’ve probably already noticed that some mixes sound a lot louder than others. Sometimes this is because their waveforms simply peak at a higher level, but not always: you might load two tracks into your DAW and see that they look similar in terms of amplitude, yet hear that one sounds a lot louder than the other.

This is where perceived volume comes into play. Our perception of loudness depends not just on the level at which a waveform peaks, but also on how long it stays that loud. As a result, a better way to quantify the perceived volume of a sound is to measure not its peak level, but its RMS level.

RMS stands for ‘root mean square’, and is a method of calculating the average amplitude of a waveform over time. Say, for example, we try to calculate the plain mean amplitude of a sine wave. The result is going to be zero, whatever its peak amplitude, because a sine wave rises and falls symmetrically around its centre line.

Clearly, then, a plain mean isn’t a useful way to measure average amplitude. RMS (the square root of the mean of the squares of the original values) works better because squaring removes the negative signs, so the result is never negative – it’s a measure of the magnitude of a set of numbers. So, a signal that has been compressed may have the same peak level as the uncompressed version, but its RMS level will be higher, making RMS a better indicator of perceived volume.
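A quick Python comparison of the plain mean and RMS over one full cycle of a sine wave shows the difference described above. The helper names are ours; real meters compute RMS over a sliding time window rather than a fixed buffer.

```python
import math

def mean(samples):
    return sum(samples) / len(samples)

def rms(samples):
    """Root mean square: square each value, take the mean, then the square root."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

N = 1000
sine = [math.sin(2 * math.pi * i / N) for i in range(N)]   # one full cycle, peak 1.0

print(mean(sine))   # ~0: positive and negative halves cancel out
print(rms(sine))    # ~0.7071, i.e. peak / sqrt(2)
```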

Talkin’ loud

When we talk about signal levels, we use a logarithmic unit known as the decibel, or dB for short. Decibels express the ratio between two values, so we use them to describe amplitude in relative terms. For example, in digital audio, a signal at 0dBFS (0 decibels full scale) reaches the maximum possible peak level (+/-1.0) on the vertical axis.

Put simply, +6dB doubles the amplitude of a signal, and -6dB halves it. If you want to get technical, it’s actually roughly +/-6.02dB (derived from 20 × log10(2)). So, if we had a sine wave that peaked at 0dBFS and applied -6.02dB of gain, it would peak at about +/-0.5. Each further -6.02dB of gain halves the peak level again. In terms of power (which is proportional to the square of the RMS level), a change of +6.02dB or -6.02dB gives us four times or a quarter of the power respectively (see Fig. 7).
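The conversions behind these figures are one-liners. In this Python sketch (function names are ours), amplitude ratios use 20 × log10 and power ratios use 10 × log10, because power scales with amplitude squared.

```python
import math

def gain_to_db(gain):
    """Amplitude ratio -> decibels."""
    return 20 * math.log10(gain)

def db_to_gain(db):
    """Decibels -> amplitude ratio."""
    return 10 ** (db / 20)

def db_to_power_ratio(db):
    """Power scales with amplitude squared, so divide by 10 rather than 20."""
    return 10 ** (db / 10)

print(round(gain_to_db(2), 2))            # 6.02
print(round(db_to_gain(-6.02), 3))        # 0.5: a 0dBFS peak drops to about +/-0.5
print(round(db_to_power_ratio(6.02), 2))  # 4.0: roughly four times the power
```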

We calculate a signal’s crest factor (a measure of how extreme the peaks in a waveform are) by dividing its peak value by its RMS value. Expressed in dB, the result gives us some idea of how dynamic a signal is. You can measure peak level, RMS level and crest factor in dB using metering software such as Blue Cat Audio DP Meter Pro. Simply put it on your master channel, and as your track plays it will update the peak and RMS averages (whose time settings can be adjusted) and the resulting crest factor in real time.
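As a sketch of the calculation a crest factor meter performs, the Python below measures a whole buffer at once; a plugin such as DP Meter Pro works over adjustable time windows instead, so its readings on real music will differ. The helper names are our own.

```python
import math

def peak(samples):
    return max(abs(s) for s in samples)

def rms(samples):
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def crest_factor_db(samples):
    """Crest factor (peak / RMS) expressed in dB."""
    return 20 * math.log10(peak(samples) / rms(samples))

N = 1000
sine = [math.sin(2 * math.pi * i / N) for i in range(N)]
square = [1.0 if i < N // 2 else -1.0 for i in range(N)]

print(round(crest_factor_db(sine), 2))    # 3.01
print(round(crest_factor_db(square), 2))  # 0.0: a square wave's peak equals its RMS
```

The more heavily a signal is compressed, the closer its crest factor moves towards the square wave’s 0dB.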

So, part of the reason two tracks can have such different perceived volume levels when they peak at the same level is that one probably has a higher RMS value. That’s not the whole story, though. Many psychoacoustic factors affect how loud something sounds to our ears, including its frequency content, how long it lasts and, crucially, its context in a track.

In the mix

This is where we really get into the realms of arrangement and – finally! – mixing. At the most basic level, mixing two waveforms together is a very simple process: all your CPU has to do is add together the values of the corresponding samples in each track to calculate the total.

This happens in exactly the same way in all DAWs, so if someone tells you that a particular DAW’s summing engine has a certain ‘sound’ to it, they’re unfortunately misinformed. In fact, there’s a very simple test you can do to see for yourself that all DAWs sum signals in exactly the same way: load the same two or more audio tracks into each DAW you want to test and bounce out a mixdown.

As long as you use the same settings (including any automatic fades, pan law and dithering) and haven’t done anything to alter the audio (such as applying timestretching), you’ll find that each DAW produces an identical file. Then load the exported files into a DAW and invert the polarity (often erroneously called the phase) of one of them. As long as the files have been imported correctly and no effects have been applied by the DAW, they’ll cancel each other out completely, leaving nothing but silence.
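Both summing and the null test can be modelled in a few lines of Python. The sample values below are made up for illustration, the two ‘bounces’ stand in for exports from two different DAWs, and the helpers assume equal-length tracks at unity gain with no panning.

```python
def mix(*tracks):
    """Sum tracks sample by sample (assumes equal lengths, unity gain, no panning)."""
    return [sum(samples) for samples in zip(*tracks)]

def invert_polarity(track):
    """Flip the sign of every sample."""
    return [-s for s in track]

def null_test(a, b):
    """Return the peak of what's left after summing `a` with a polarity-inverted `b`."""
    residual = mix(a, invert_polarity(b))
    return max(abs(s) for s in residual)

kick = [0.5, 0.25, -0.25, 0.0]      # hypothetical sample values
bass = [0.125, -0.5, 0.25, 0.375]
bounce_a = mix(kick, bass)          # stand-in for one DAW's export
bounce_b = mix(kick, bass)          # stand-in for another DAW's export

print(bounce_a)                     # [0.625, -0.25, 0.0, 0.375]
print(null_test(bounce_a, bounce_b))                       # 0.0: complete silence
print(null_test(bounce_a, [s * 0.5 for s in bounce_a]))    # 0.3125: a level change leaves a residue
```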

Another thing worth bearing in mind about DAW mixers is that they use 32-bit or (now much more commonly) 64-bit ‘floating point’ summing to calculate what the mix should sound like, before it’s either delivered to your ears via your audio interface and speakers or bounced to an audio file.

Floating point means that some of the bits are devoted to describing an exponent, which in layman’s terms means the format can store a much wider range of values. While 16-bit fixed point has a range of 96dB, 32-bit floating point has a range of more than 1500dB!
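The 1500dB figure can be reproduced from the limits of IEEE 754 single precision. This Python sketch counts only normal numbers (subnormals would stretch the range further) and uses the standard `struct` module to confirm the largest value really fits in four bytes; the constant names are our own.

```python
import math
import struct

# Largest and smallest *normal* positive values in IEEE 754 single precision (32-bit float)
FLOAT32_MAX = (2 - 2 ** -23) * 2 ** 127
FLOAT32_MIN_NORMAL = 2 ** -126

def to_float32(x):
    """Round-trip a Python float through 4-byte IEEE 754 storage."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

range_db = 20 * math.log10(FLOAT32_MAX / FLOAT32_MIN_NORMAL)
print(round(range_db))                          # 1529
print(to_float32(FLOAT32_MAX) == FLOAT32_MAX)   # True: the value fits in 32 bits
print(round(20 * math.log10(2 ** 16), 1))       # 96.3 for 16-bit fixed point
```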

That’s clearly more headroom than we’d ever have any practical use for, which explains why pushing the level faders extremely high or low in your DAW’s mixer doesn’t really have a negative effect on the sound, unless the signal passes through a plugin that doesn’t cope well with extremely high or low values, or the master channel is clipping.

In fact, you could run every channel in your mixer well into the red, but as long as the master channel isn’t clipping, the signal won’t distort: 32-bit float can handle almost anything you throw at it.
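To see why, compare two hypothetical signal paths in Python: one keeps the boosted channel in floating point, while the other stores it as 16-bit integers before the master fader pulls it back down. Real DAWs don’t store channels as 16-bit integers; the fixed-point path is a thought experiment showing what the float headroom protects you from, and the +18dB boost is an arbitrary choice.

```python
import math

def to_int16(x):
    """Store a -1.0..+1.0 sample as a 16-bit integer, clipping anything out of range."""
    return max(-32768, min(32767, round(x * 32768)))

N = 64
source = [0.5 * math.sin(2 * math.pi * i / N) for i in range(N)]   # peaks at about -6dBFS
boost = 8.0   # about +18dB on the channel fader: well into the red

# Floating-point path: boost on the channel, pull back down on the master
float_out = [(s * boost) / boost for s in source]

# Hypothetical fixed-point path: the boosted channel is stored as 16-bit integers first
fixed_out = [to_int16(s * boost) / 32768 / boost for s in source]

print(max(abs(a - b) for a, b in zip(source, float_out)))   # 0.0: nothing lost
print(max(abs(a - b) for a, b in zip(source, fixed_out)))   # large: the peaks were clipped
```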


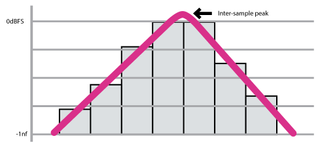

Running your DAW’s master channel into the red is a bad idea, though, because even if it’s not producing any audible distortion, you won’t be able to trust what you’re hearing any more: different digital-to-analogue converters handle signals exceeding 0dBFS in different ways. Distortion can even be caused by signals whose samples don’t clip!

This is caused by what are known as inter-sample peaks, and it doesn’t happen within your DAW. It only occurs once your audio interface has converted the signal from digital to analogue, specifically in the digital-to-analogue converter’s (DAC’s) reconstruction filter. This filter is what turns a stepped digital signal into a smooth analogue one, and as Fig. 8 shows, the smoothed waveform can rise above the level of the samples themselves, which causes problems with especially loud digital signals.
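Here is a minimal Python illustration of an inter-sample peak. It assumes an ideal reconstruction filter, so the analogue output is simply the band-limited sine the samples came from; real DACs only approximate this. A tone at a quarter of the sample rate, offset by 45 degrees, never has a sample on its crest, so it can be scaled until every sample sits at 0dBFS while the reconstructed waveform peaks about 3dB higher. Evaluating the waveform at 16 times the sample rate is our stand-in for what an oversampling ‘true peak’ meter estimates.

```python
import math

fs = 44100.0
freq = fs / 4              # a tone at a quarter of the sample rate
phase = math.pi / 4        # offset so no sample lands on the waveform's crest
amp = 1 / math.sin(phase)  # scaled so the *samples* peak at exactly 1.0 (0dBFS)

def waveform(t):
    """The band-limited sine an ideal reconstruction filter would output."""
    return amp * math.sin(2 * math.pi * freq * t + phase)

sample_peak = max(abs(waveform(n / fs)) for n in range(64))
oversample = 16
true_peak = max(abs(waveform(k / (fs * oversample))) for k in range(64 * oversample))

print(round(sample_peak, 6))                               # 1.0
print(round(true_peak, 6))                                 # 1.414214
print(round(20 * math.log10(true_peak / sample_peak), 2))  # 3.01dB over 0dBFS
```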

Whenever audio leaves your DAW – be it as sound or as an exported audio file – it’s converted from a 32- or 64-bit signal to a 16- or 24-bit one. When this happens, resolution is lost because fewer bits are used. This is where an oft-misunderstood process known as dithering comes into play, whereby a very low level of noise is added to the signal to increase its perceived dynamic range.

It works a little like this: imagine you want to paint a grey picture, but only have white and black paint. By painting very small squares of alternating white and black, you can create a third colour: grey. Dithering uses the same principle, rapidly alternating a bit’s state to create a seemingly wider range of values than would otherwise be available. See Fig. 9 for a visual example of dithering in action.
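The paint analogy can be tested in Python. This sketch uses triangular (TPDF) dither spanning ±1 least significant bit, a common choice that the article itself doesn’t specify, and requantises to an 8-bit step size only because that makes the effect easy to see; real exports go to 16 or 24 bits, and many dither plugins also apply noise shaping. A level that falls between two available steps vanishes without dither, but with dither the output flickers between neighbouring steps and its average recovers the original level.

```python
import random

def requantise(x, step):
    """Round to the nearest multiple of `step` (one least significant bit)."""
    return round(x / step) * step

def tpdf_dither(step, rng):
    """Triangular noise spanning +/-1 LSB: the difference of two uniform random values."""
    return (rng.random() - rng.random()) * step

rng = random.Random(0)   # fixed seed so the run is repeatable
step = 1 / 128           # one LSB of an 8-bit signed word
signal = 0.3 * step      # a quiet level that falls between two available steps

plain = [requantise(signal, step) for _ in range(100_000)]
dithered = [requantise(signal + tpdf_dither(step, rng), step) for _ in range(100_000)]

print(sum(plain) / len(plain) / step)        # 0.0: without dither the detail is simply lost
print(sum(dithered) / len(dithered) / step)  # close to 0.3: the average recovers the level
```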

Dither should be applied whenever you reduce the bit depth. It’s therefore sensible to apply dither to your 32- or 64-bit mix when exporting it as a 16-bit WAV file to play in a club, or when supplying a 24-bit WAV file to a mastering engineer.

WAV and AIFF are uncompressed audio formats, which means they’re suitable for transferring audio files between DAWs and audio editing applications. Compressed audio formats such as MP3 and M4A (data compression, not the dynamic range compression described earlier) have the advantage of much smaller file sizes, but use psychoacoustic tricks to remove data from the signal that the human ear finds hard to perceive.

As such, these ‘lossy’ formats still sound great under normal consumer listening conditions, but they don’t behave exactly like their uncompressed counterparts when subjected to processing. While using an MP3 file as the source for, say, a master isn’t necessarily going to sound bad, the results will depend on the material, the context it’s used in, and the encoding algorithm that was used to compress it. To make sure the finished product sounds as good as possible, it pays to use the highest-quality source material you can.

Moving on

The human ear and mind are surprisingly sensitive listening devices, and, as a result, it’s easy for someone who knows nothing about production to identify a bad or weak mix – it will simply sound less ‘good’ than other tracks.

This is especially important in a club environment, where the music is usually delivered as a continuous stream, mixed together by a DJ. When the DJ uses the crossfader to cut quickly between channels on the mixer, it’s similar to A/B-ing tracks in a studio: any deficiencies in the mixdown of one track compared to the other will be glaringly obvious!

To get your mixes sounding as good as the competition, you need to push your digital audio to its very limits, and it’s only by understanding what those limits are that you can achieve this.